Chip Design Innovations for the Age of AI and Machine Learning

The digital age has witnessed tremendous advances in Artificial Intelligence (AI) and Machine Learning (ML), two technologies transforming virtually every industry, from healthcare to finance to autonomous vehicles. However, such complex systems require powerful and efficient hardware infrastructure to support them. Advanced chip design plays a central role in developing AI and ML, because their enormous computational requirements must be met. This article covers recent innovations in chip design and how companies around the globe, particularly in India, are pushing the frontiers of technology to meet AI and ML demands.

Whether you are a tech junkie or in the business of hardware, understanding what is happening in chip design will give you insight into how hardware is evolving to serve AI.

1. Evolution of Chip Designs in AI and ML

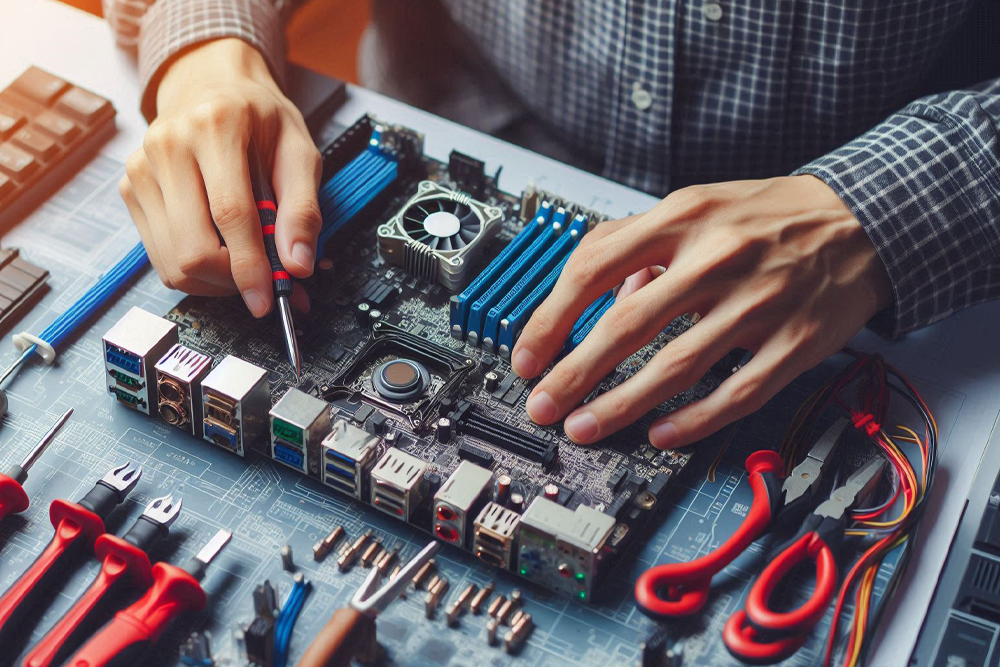

Chip design has evolved from simple microprocessors to highly specialized processing units optimized for the unique requirements of AI and ML. Beyond traditional CPUs, and even GPUs, this is where Application-Specific Integrated Circuits (ASICs) and Field-Programmable Gate Arrays (FPGAs) come into their own. ASICs are custom-designed and fabricated to perform specific AI-related functions, while FPGAs can be configured for those functions after manufacture. Both aim to process complex neural networks at high speed with low power consumption.

Companies targeting efficiency, sustainability, and scalability in their AI operations are keen on this degree of specialization. For instance, many businesses seek ASIC design consultation to tune a chip's capabilities precisely to their AI applications. As demand for AI technology grows, companies will have to turn to chips that best balance performance with cost if they are to stay competitive.

Read more about how ASIC design consulting can enhance the performance of AI further at this link.

2. Custom-Built AI Architectures: TPUs, FPGAs, and Beyond

General-purpose chip architectures struggle to meet the demands of AI and ML workloads. New dedicated chips, such as TPUs and neuromorphic chips, are emerging to address these challenges.

- Tensor Processing Units (TPUs): Google developed TPUs specifically to accelerate machine learning. They do this by speeding up the matrix operations that form the foundation of most AI models. As a result, TPUs can train models in much less time, which makes them valuable in AI-heavy fields such as image and speech recognition.

- Neuromorphic Chips: Inspired by the human brain, these chips process information through architectures that closely resemble biological neural networks. Unlike conventional sequential processing, neuromorphic chips are structured for the highly parallel computation typical of decision-making and pattern recognition, which makes them useful in robotics and real-time analysis.

- Field-Programmable Gate Arrays (FPGAs): FPGAs are another hardware option for AI and ML. Because they can be reprogrammed as tasks change, FPGAs suit AI applications whose models are updated frequently. Although not as specialized as ASICs, FPGAs offer a good compromise between flexibility and performance.
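To make the point about matrix operations concrete, here is a minimal sketch in plain Python of a single dense neural-network layer expressed as a matrix multiplication. The function names (`matmul`, `mac_count`, `dense_layer`) and the sample values are illustrative assumptions, not part of any TPU API. The multiply-accumulate count is the kind of work that matrix-oriented accelerators are built to run in parallel.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)]
            for row in a]


def mac_count(m, k, n):
    """Number of multiply-accumulate operations in an (m x k) @ (k x n) product."""
    return m * k * n


def dense_layer(inputs, weights, bias):
    """One dense layer: ReLU(inputs @ weights + bias)."""
    z = matmul(inputs, weights)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in z]


# Hypothetical toy data: a batch of 2 samples, 2 input features, 3 output units.
batch = [[1.0, 2.0], [0.5, -1.0]]
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]
bias = [0.0, 0.5, -1.0]

outputs = dense_layer(batch, weights, bias)
print(outputs)              # [[2.0, 2.5, 2.0], [0.0, 0.0, 0.0]]
print(mac_count(2, 2, 3))   # 12
```

Even this toy layer needs 12 multiply-accumulates. Real models repeat the same pattern with far larger matrices, which is why hardware that accelerates matrix math speeds up training so much.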

These specially designed chips offer users in AI-driven industries significant gains in both speed and energy efficiency. Chipset manufacturing companies are now focusing on such chips, often customizing them to the needs of a particular industry. Read more about these technologies from a chipset manufacturing company in India.

3. PCB Design Advances: The Route to Complex AI Hardware

PCB design is also adapting to the requirements of AI chipsets. Modern high-density interconnect (HDI) PCBs must accommodate AI chips that sometimes have thousands of connections. PCB design companies are adopting new materials and multi-layer architectures to enable both high-speed data transfer and efficient thermal management.

For example, HDI PCBs are now a common choice for AI applications. They fit more circuitry per unit area, which makes them well suited to the compact designs that AI and ML hardware requires. In addition, PCBs are now designed around cooling solutions such as heat sinks and liquid cooling to avoid overheating during high-performance operation.

PCB design companies are also adopting AI tools in their workflows to speed up design and reduce the chance of errors. AI-based software automatically identifies problems in PCB layouts and suggests improvements. With higher design accuracy and shorter production timelines, companies can bring AI innovations to market faster.

Learn how advanced PCB design fuels AI innovations from a leading company in PCB design.

4. Edge AI Chips: Enabling Real-Time Intelligence on the Edge

The future of AI is moving toward processing power closer to where the data originates, or Edge AI. In this approach, AI chips are integrated directly into edge devices such as IoT sensors, autonomous vehicles, and smartphones, allowing companies to process data locally. This means less latency, increased data privacy, and real-time decision-making capabilities.

Edge AI is useful for real-time image processing and anomaly detection without depending on the cloud, especially in applications where latency has serious consequences, such as healthcare and manufacturing. Demand for Edge AI is high and is driving chipset manufacturers to invest increasingly in specialized chips for edge environments.
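As a rough illustration of anomaly detection running entirely on a device, the sketch below flags sensor readings that deviate sharply from a short rolling window, with no network call involved. The class name `EdgeAnomalyDetector`, the window size, the threshold, and the sample readings are all assumptions chosen for illustration. A production edge device would more likely run a trained model on a dedicated chip.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyDetector:
    """Flag readings that sit far from the recent rolling average (z-score test)."""

    def __init__(self, window=5, threshold=3.0):
        self.window = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold          # allowed distance in standard deviations

    def observe(self, value):
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.window) == self.window.maxlen:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so one spike does not skew it.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


# Hypothetical temperature readings with one obvious spike.
readings = [20.0, 20.5, 19.5, 20.0, 20.5, 35.0, 20.0]
detector = EdgeAnomalyDetector(window=5, threshold=3.0)
flags = [detector.observe(r) for r in readings]
print(flags)  # [False, False, False, False, False, True, False]
```

Because every decision is made from data already on the device, the response time depends only on local compute. That is the latency and privacy benefit described above.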

Chipset manufacturing companies that specialize in edge solutions are now growing in India, meeting demand for low-power, high-efficiency edge chips both domestically and from around the globe.

For more information about the future of edge AI and its applications, check the developments of chipset manufacturing companies in India.

5. Advanced Manufacturing and AI Chip Innovation

Building AI chips is not a trivial task. It requires highly sophisticated manufacturing techniques, such as silicon photonics and 3D stacking. These techniques can make the difference in achieving very high performance without a corresponding increase in cost or energy consumption.

- Silicon Photonics: This technology transmits data using light rather than electrical signals, which allows much higher data rates and lower power consumption. It is especially relevant to data centers, where AI workloads demand fast data transfer.

- 3D Stacking: This technology stacks chips vertically, reducing their physical footprint and increasing data transfer rates between chip layers. The denser stack also concentrates heat, so thermal design needs extra care. 3D stacking greatly helps AI applications, which require high integration within compact setups.

The chipset manufacturing market in India is gaining momentum as companies adopt advanced production techniques, which can cut production costs while maintaining high chip quality.

Want to know more about advanced manufacturing and how it influences AI hardware?

6. Custom AI Chip Design: A New Opportunity for Startups

The high cost of running AI workloads on general-purpose processors tends to limit small AI-focused startups. However, this landscape is slowly shifting as the trend of designing custom chips for AI applications continues to grow. Startups can work with ASIC design consultants to develop custom chips that fulfil their specific AI needs while keeping expenses down with minimal cost to performance.

By designing their own AI chips, companies can build in security, optimize power consumption, and even align their hardware with particular AI models. This helps smaller businesses bring AI innovations to market without excessive complexity.

Indian chipset manufacturing companies and ASIC designers are also expanding their presence, giving startups the opportunity to use these services and scale their operations relatively cheaply.

Learn more about how custom chip design empowers startups, with insight from ASIC Design Consultation.

7. Future Trends of Chip Design in AI — Quantum Computing and Heterogeneous Computing

Two notable trends are moving chip design forward: Quantum Computing and Heterogeneous Computing.

- Quantum Computing: Quantum computing is still in its infancy, but it promises to be a future revolution for AI. Quantum chips may solve certain complex problems that are impractical for classical computers, opening new avenues in data analysis and cryptography.

- Heterogeneous Computing: In heterogeneous computing, different processors, such as CPUs, GPUs, and FPGAs, work together and share the processing load to reach a computational goal. This makes AI processing more efficient and more versatile, covering applications that range from simple image classification to complicated model training.
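The load sharing described for heterogeneous computing can be sketched as a simple greedy scheduler: each task goes to whichever processor would finish it earliest. The task names, the relative cost figures in `COST`, and the `assign` function are hypothetical values chosen for illustration, not measurements from real hardware or any vendor's runtime.

```python
# Hypothetical relative execution cost of each task type per device (lower is faster).
COST = {
    "control_logic":   {"cpu": 1.0,  "gpu": 5.0, "fpga": 4.0},
    "matrix_multiply": {"cpu": 10.0, "gpu": 1.0, "fpga": 3.0},
    "stream_filter":   {"cpu": 6.0,  "gpu": 3.0, "fpga": 1.0},
}


def assign(tasks):
    """Greedily place each task on the device that would finish it earliest.

    Returns the (task, device) plan and the overall finish time (makespan).
    """
    busy_until = {"cpu": 0.0, "gpu": 0.0, "fpga": 0.0}
    plan = []
    for task in tasks:
        device = min(busy_until, key=lambda d: busy_until[d] + COST[task][d])
        busy_until[device] += COST[task][device]
        plan.append((task, device))
    return plan, max(busy_until.values())


tasks = ["matrix_multiply", "stream_filter", "control_logic", "matrix_multiply"]
plan, makespan = assign(tasks)
for task, device in plan:
    print(f"{task} -> {device}")
print("makespan:", makespan)  # makespan: 2.0
```

With these assumed costs, the matrix work lands on the GPU, the streaming filter on the FPGA, and the control logic on the CPU, so the whole batch finishes in 2.0 time units. Running everything on the CPU would take 27.0.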

As quantum computing becomes more accessible and heterogeneous computing architectures advance, the future of AI chip design will push the limits of what’s possible. One company to keep an eye on is Nano Genius Technologies, for more insight on AI hardware innovations and consulting services.

- What is an ASIC? How does it enhance the performance of AI hardware?

ASIC stands for Application-Specific Integrated Circuit. It is a chip custom-designed to execute a specific set of tasks, rather than the broad range of work a general-purpose processor handles. ASICs can be optimized for workloads such as deep learning, significantly boosting performance.

- Why is edge AI important for AI applications, and what role do edge AI chips play?

Edge AI refers to processing data directly on devices at the network edge, such as IoT sensors. This improves real-time efficiency while preserving data privacy and reducing dependence on cloud-based processing.

- What are neuromorphic chips? What is the difference between those and regular chips?

Neuromorphic chips mimic the neural architecture of the human brain, so they are well suited to the parallel processing that many AI applications need, particularly pattern recognition and decision-making. They can typically achieve better energy efficiency than conventional chips.